MTG

A Benchmark Suite for Multilingual Text Generation

👋 Hi there! This is the project page for the NAACL 2022 (Findings) paper “MTG: A Benchmark Suite for Multilingual Text Generation”.

Check out our paper here.

About MTG

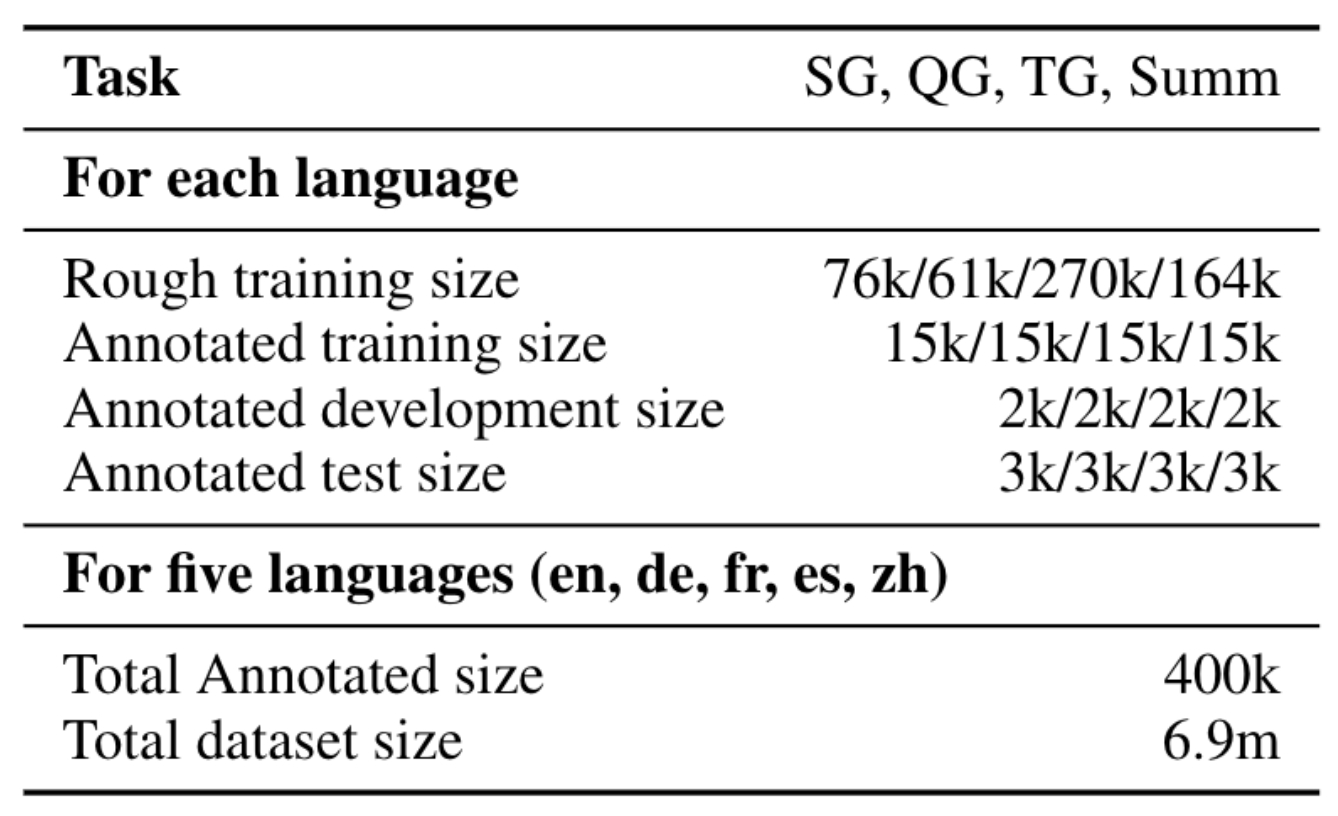

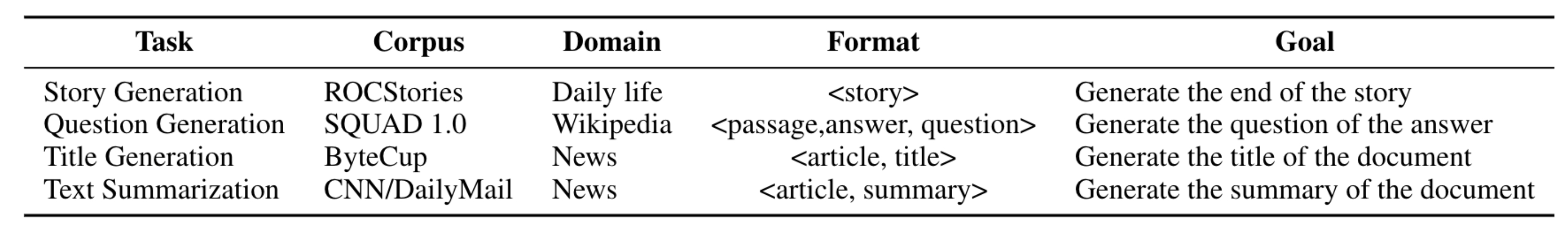

MTG is a human-annotated multilingual multiway dataset. Multiway means that the same sample is expressed in multiple languages. It covers four generation tasks (story generation, question generation, title generation and text summarization) across five languages (English, German, French, Spanish and Chinese).

MTG’s subsets and sample sizes

Overview of MTG’s tasks

Download

All

Download link for all tasks.

Question Generation

Generate a correct question for a given passage and its answer.

Story Generation

Generate the end of a given story context.

Text Summarization

Condense the source document into a coherent, concise, and fluent summary.

Title Generation

Convert a given article into a condensed sentence while preserving its main idea.

Evaluation

To evaluate your results on the metrics reported in our paper, please send your system outputs to this email. We will send the evaluation results back to you as soon as possible.

Name each system output file [TG/QG/SG/Summ]-xxx.json (e.g., TG-crosslingual_res.json). The JSON file should map each language code (en, de, es, fr, zh) to a list of outputs. A language's list may be left empty, in which case that language is not evaluated:

{
  "en": ["system output 1", "system output 2"],
  "es": []
}
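As a minimal sketch of how such a file might be produced, the Python below builds the language-keyed dictionary and writes it under the naming scheme described above. The helper names (`build_submission`, `submission_filename`, `write_submission`) are hypothetical and not part of any MTG tooling. Filling languages without results with empty lists is an assumption based on the note that empty results are not evaluated.

```python
import json
from pathlib import Path

# Language codes and task prefixes as listed on this page.
LANGUAGES = ("en", "de", "es", "fr", "zh")
TASK_PREFIXES = ("TG", "QG", "SG", "Summ")


def build_submission(outputs_by_lang):
    """Return a dict keyed by language code with a list of output strings each.

    Assumption: languages without results are included as empty lists.
    """
    unknown = set(outputs_by_lang) - set(LANGUAGES)
    if unknown:
        raise ValueError(f"unexpected language keys: {sorted(unknown)}")
    submission = {}
    for lang in LANGUAGES:
        outputs = list(outputs_by_lang.get(lang, []))
        if not all(isinstance(item, str) for item in outputs):
            raise TypeError(f"outputs for '{lang}' must all be strings")
        submission[lang] = outputs
    return submission


def submission_filename(task, tag):
    """Build a file name of the form [TG/QG/SG/Summ]-xxx.json."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"task must be one of {TASK_PREFIXES}")
    return f"{task}-{tag}.json"


def write_submission(outputs_by_lang, task, tag, directory="."):
    """Write the submission as UTF-8 JSON and return the path of the file."""
    path = Path(directory) / submission_filename(task, tag)
    with path.open("w", encoding="utf-8") as handle:
        # ensure_ascii=False keeps Chinese and accented text human-readable.
        json.dump(build_submission(outputs_by_lang), handle,
                  ensure_ascii=False, indent=2)
    return path
```

For example, `write_submission({"en": ["system output 1", "system output 2"]}, "TG", "crosslingual_res")` would write `TG-crosslingual_res.json` to the current directory, with empty lists for the other four languages.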

The results of different systems are displayed in the leaderboard.

Citation & Contact

If you find this work useful for your research, please cite our paper (pre-print; official BibTeX coming soon):

@article{chen2021mtg,
  title={MTG: A Benchmarking Suite for Multilingual Text Generation},
  author={Chen, Yiran and Song, Zhenqiao and Wu, Xianze and Wang, Danqing and Xu, Jingjing and Chen, Jiaze and Zhou, Hao and Li, Lei},
  journal={arXiv preprint arXiv:2108.07140},
  year={2021}
}